I have already booked my VCAP-DCA exam for the 20th of December to give myself a deadline. So I have started reading through the VCAP-DCA blueprint and the study guide by Edward Grigson again. At the same time I am making a list of tasks to practice on the command line, as it is my weakest area of VMware knowledge, along with scripting and PowerCLI. I still have 35 days to cover at least the basics of those.

Yesterday I played with iSCSI multipathing in a lab that I had just rebuilt from scratch, and decided to document all the commands I used so that I can refresh this knowledge a couple of days before taking the exam. I will include all the steps I took before I had SSH access to my ESXi host. Surely, all this information can easily be found on Google, but it is nice to have everything you need in one place, isn't it?

Brief description of the lab config:

I have two hosts, ESXi and ESX 4.0, and one FreeNAS virtual appliance. Each host has 3 vmnics. vmnic0 is used solely for management. vmnic1 and vmnic2 are configured on a separate network for iSCSI connections. FreeNAS has only one NIC and one IP address, on which 2 iSCSI targets are presented.

Let's start.

Wednesday 16 November 2011

Tuesday 15 November 2011

P2V conversion of a server with SAN disks attached

This is a short guide for those who have never done a cold clone of a server with SAN disks presented to it. It was my first experience and definitely not the last one, so I decided to write down all the steps I took.

The P2V conversion process has always been quite simple for me. I always used the vCenter-integrated Converter to run hot cloning, since the configuration of the servers I had converted was quite consistent and simple, e.g. Windows 2003 or 2008, a couple of volumes on local RAID1, and standard Windows services and applications running on the server.

However, this week I was asked to do P2V for several GIS servers running Oracle databases on SAN disks. Surely, I could run hot cloning and convert those SAN disks into standard vmdk files; however, I didn't like the size of the SAN disks (each is about 1TB) for the following reasons:

- it takes too long to move such vmdk files to another VMFS datastore;

- most of the VMFS datastores don't have enough free space to accommodate such disks.

To be on the safe side I decided to google a bit for P2V best practices. I read a couple of articles and found a very useful link, which I posted below. I also ran a quick test P2V conversion of a server with specs similar to my GIS servers, to make sure I wouldn't face problems with SAN disks presented to the VM as RDM disks.

The adventure started when I tried to find VMware Converter's cold clone ISO. I couldn't find it anywhere until I found a post on the VMware Communities that explained where to get it. First of all, your VMware account needs to have the proper license to let you download the ISO file. Here you can find the latest version of the VMware vCenter Converter BootCD. You can make sure it is there by clicking Show Details in the Components section. Once you click the Download button you will be able to select just the BootCD ISO file for download.

OK, here is the step-by-step guide that worked for me, which doesn't guarantee it will work the same way for you :) Take your time to go through all the steps and make sure they are relevant and correct for your environment.

1. First and most important: collect as much information as possible about the source server. I found a very useful document called P2V Check list. Generally speaking, it is much more than a simple checklist. You can adjust it to your needs and situation, but basically it gives you a very consistent approach to P2V conversion. In my case it would have been very useful if the P2V process had failed and I had to restore all services and configuration on the source physical server.

2. Disable all services that depend on SAN disks. In my situation I first documented the status of all Oracle services and then set their Startup type to Disabled. This prevents them from failing, or corrupting the database, on the first power-on of the cloned virtual machine. I restore the original status once I have made sure the RDM disks are correctly presented to and recognized by the VM.

3. Shut down the server and unpresent the SAN disks on your storage controller. Here I made a mistake: I unpresented the SAN disks too late, after VMware Converter had already scanned all the hardware. So when I ran the Converter task it gave me the "Unable to determine Guest Operating system" error. Once I booted the server from the cold clone ISO again, the problem was gone.

4. Boot the server from the cold clone ISO.

5. Set up networking. I used DHCP settings. The main thing is to make sure the switch port the server is connected to is configured with Auto/Auto settings, because you can't change the server NIC's speed and duplex settings from VMware Converter.

6. Run the Import Machine task and go through the wizard. I didn't notice anything special there that made me scratch my head. I only selected VMware Tools installation, so that all drivers for the new virtual hardware are installed on the VM's first start, and chose not to power on the VM when the conversion is over.

7. Once P2V is completed, review the virtual hardware and then power on the VM.

8. Unpresent the SAN disks from the physical server.

9. Uninstall all multipath software. In my case I couldn't get my SAN disks back online on the VM until I uninstalled the HP DSM and Emulex software from the VM.

10. Present the SAN disks to all ESXi hosts. Make sure the LUN number is consistent across all ESXi hosts.

11. Shut down the VM and add the SAN disks as RDM disks. You can use them in either Physical or Virtual compatibility mode. Look at your checklist from step 1 and make sure you add them on a separate SCSI controller and in the correct order.

12. Make sure you can see the content of the disks once you have powered on the VM.

13. Set the services you disabled in step 2 back to the Automatic startup type.

14. Reboot the VM and check that all applications run properly.
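The LUN-consistency check from the step where the SAN disks are presented to all ESXi hosts can be done by eye for two hosts, but for a larger cluster a small script helps. Below is a minimal Python sketch, assuming you have already collected, per host, a mapping of device ID to LUN number (for example by reading it off each host's storage adapter view in the vSphere Client). The function name and the naa IDs in the demo are hypothetical.

```python
def inconsistent_luns(host_luns):
    """Find devices whose LUN number differs between hosts (or is missing on some host).

    host_luns maps a host name to {device_id: lun_number}. The result maps each
    problematic device_id to {host: lun_number or None}.
    """
    all_devices = set()
    for luns in host_luns.values():
        all_devices.update(luns)

    problems = {}
    for device in sorted(all_devices):
        # None marks a host that does not see this device at all.
        seen = {host: luns.get(device) for host, luns in host_luns.items()}
        if len(set(seen.values())) > 1:
            problems[device] = seen
    return problems


if __name__ == "__main__":
    # Hypothetical inventory typed in by hand; the naa IDs are made up.
    inventory = {
        "esx01": {"naa.6001a": 1, "naa.6001b": 2},
        "esx02": {"naa.6001a": 1, "naa.6001b": 3},
        "esx03": {"naa.6001a": 1},
    }
    for device, per_host in inconsistent_luns(inventory).items():
        print(device, per_host)
```

Here naa.6001b is reported because esx01 and esx02 disagree on its LUN number and esx03 does not see it at all.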

Hope it saves you some time on such a routine task.

PS Actually, I don't think the disks' order when adding the RDM disks is important. MS Windows is supposed to automatically recognize all disks it has ever been connected to, since it stores all disk signatures in the registry to map partitions to drive letters. Though I didn't dare to test this idea on the production server :)

If you find this post useful please share it with any of the buttons below.

Wednesday 19 October 2011

Practical lessons - Fault Tolerant VM

I have read quite a few official docs and various bloggers' posts about VMware FT, but once again I got evidence that practical experience is the best way of studying.

FT seems to be an amazing feature, but its long list of requirements and restrictions can put off most VMware enthusiasts. Nevertheless, FT is one of the topics in the VCAP-DCA blueprint, so I spent half a day in my lab playing with FT and trying to enable it for just one VM.

I wrote down some important lessons I learned about it. Even though they seem very obvious, you need some practical experience to remember them forever :)

- Identical CPU models are required. In my lab it didn't work on hosts with Xeon 5550 and Xeon 5650 CPUs.

- The same patch/firmware level is required. Same here: the hosts had different firmware versions.

- The VM Monitor mode has to be set to "Use Intel VT-x/AMD-V for CPU virtualization and software for MMU virtualization". Yes, I had read that EPT and Large Pages are not supported with FT, but I didn't know this had to be switched off manually.

- A VM disk can be converted to thick eager-zeroed only when the VM is shut down. I was quite sure it was doable while the VM was running.

- The memory reservation is set to the amount of configured memory. You need to be very careful enabling FT if you use HA with Admission Control configured with "Host failures cluster tolerates", because this can impact the slot size of your HA cluster.

- It is possible to set the Hyperthreaded Core Sharing mode to None to provide slightly better performance for an FT VM. Actually, I am not really convinced it provides better performance for an FT-enabled VM, but at least it guarantees that no other vCPU will be scheduled on the same physical core as the FT VM's vCPUs. My main idea was to make sure it is compatible with FT.

- When you disable FT, the secondary VM still stays in vSphere, but it is powered off and disabled. All historical data about FT performance is kept.

- When you turn off FT, the secondary VM is removed and all historical data is deleted.

- Physical compatibility mode RDM disks are not supported in an FT VM.
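To see why the FT memory reservation matters for HA, here is a simplified Python model of the vSphere 4.x "Host failures cluster tolerates" slot calculation: the slot is the largest CPU reservation (at least 256 MHz) and the largest memory reservation plus overhead among powered-on VMs, and a host holds as many slots as both its CPU and memory allow. The 100 MB per-VM overhead and the 16 GHz / 48 GB host size are hypothetical numbers, not figures from my lab, and the model ignores everything else HA takes into account.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cpu_reservation_mhz: int = 0
    mem_reservation_mb: int = 0
    mem_overhead_mb: int = 100  # hypothetical; real overhead depends on vCPUs and vRAM


# vSphere 4.x HA uses 256 MHz as the CPU slot when no VM has a larger CPU reservation.
DEFAULT_MIN_CPU_MHZ = 256


def slot_size(vms):
    """Return (cpu_mhz, mem_mb) of one HA slot: the largest reservation among powered-on VMs."""
    cpu = max([DEFAULT_MIN_CPU_MHZ] + [vm.cpu_reservation_mhz for vm in vms])
    mem = max(vm.mem_reservation_mb + vm.mem_overhead_mb for vm in vms)
    return cpu, mem


def slots_per_host(host_cpu_mhz, host_mem_mb, slot):
    """A host holds as many slots as the scarcer of its CPU and memory allows."""
    cpu_slot, mem_slot = slot
    return min(host_cpu_mhz // cpu_slot, host_mem_mb // mem_slot)


if __name__ == "__main__":
    vms = [VM(f"vm{i}") for i in range(10)]
    before = slot_size(vms)
    # Enabling FT sets the memory reservation to the full configured memory (8 GB here).
    vms.append(VM("ft-vm", mem_reservation_mb=8192))
    after = slot_size(vms)
    print(before, slots_per_host(16000, 49152, before))
    print(after, slots_per_host(16000, 49152, after))
```

With ten unreserved VMs the example host holds 62 slots (limited by CPU); adding one FT VM with 8 GB of configured memory drops it to 5 slots (limited by memory), which is exactly the admission-control surprise mentioned above.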

I have also found a minor bug when you turn off FT. When you enable FT for a VM, it warns you about the memory reservation and says the reservation will be maintained while FT is on.

However, when I turned off FT the VM still kept its memory reservation. Not a big deal, but it is good to know you need to check the VM settings after FT is switched off.

There are a lot of other problems you can run into when enabling FT, but my story was not that long :)

Saturday 8 October 2011

My VCP 5 Exam experience

One year ago I passed the VCP4 exam. That was a tough adventure. In August 2010 I attended vSphere 4.1 training, and it was my very first experience with virtualization technologies. Two weeks after the training I built my first vSphere farm on new HP Blade servers and carried out my first migration from VI 3.5 to vSphere 4.1. It was a hard time for me: zero real-life experience, and a lack of knowledge and understanding of how all these technologies work. However, by October 2010 I was ready to take my first VMware exam. My final score was just a little higher than the passing score, but I was still happy, since I had fallen in love with vSphere and the virtualization world.

This year I decided to take the exam without any hands-on experience with vSphere 5, for the simple reason that I don't have free time to build a proper lab and play around with the new features. All my time is currently devoted to preparation for the VCAP-DCA lab exam. Since this preparation has been going on for the last 6 months, I can say I now have a solid understanding of the main vSphere technologies. Also, I have designed and built a few small to medium-size vSphere farms and gained some real-life experience with vSphere.

So I started by reading all the "vSphere 5: What's New" documentation. After that I took the mock exam on the VMware site and found out that there were still some gaps in my vSphere 5 knowledge. Reading official documentation is not much fun. Therefore I wanted to buy a good book that explains the new technologies in depth and, more importantly, provides examples of their practical implementation, which helps me a lot to memorize the material. Surprise, surprise: I found only one vSphere 5 book available on Amazon, the great book by Duncan Epping and Frank Denneman; however, it was not exactly what I wanted for VCP5 exam preparation. So I had to go back to the VCP5 Blueprint and go through all the links to official VMware documentation, which took me about a week.

The exam was more difficult than I expected. I was actually a bit scared after I answered the first 10 questions and wasn't sure my answers to 5 of them were correct. I was surprised there were very few questions about configuration maximums and none about licensing. That was a bit frustrating, as I had spent a lot of time in debates about the new licensing model :) Surprisingly, there were more questions about the vCenter appliance than I expected. I can definitely say this exam requires more real-life experience with vSphere than VCP4. The good thing is that probably about 80% of the exam material is inherited from the VCP4 exam; therefore, if you feel confident in your vSphere 4 expertise, it shouldn't take you more than a week to go through the blueprint briefly, focusing only on new features. Additionally, I checked some popular virtualization blogs to get an alternative view or interpretation of particular features, and I really like reading implementation stories.

A day before the exam I looked for more mock exams on the Internet and found some good links here. I would say none of them is as difficult as the real one, but at least you can find out where your gaps in vSphere 5 knowledge are and gain some confidence :)

Now I can go back to my VCAP-DCA lab. I would like to wish good luck in this exam to all of you who have the same passion for virtualization technologies as I do :)

Wednesday 28 September 2011

Storage IO Control and funny licensing issue

Today I have been finalizing a new vSphere installation. Basically, I had already migrated all VMs from the old infrastructure to the newly built one and was doing some fine-tuning, which includes setting up monitoring, logging, etc.

One of the tasks was reviewing what I still remember about SIOC and whether there are any objections to enabling it on the new vSphere. After reading a couple of useful VMware docs (1, 2) and checking performance stats in vCenter, I thought it might be useful to have it enabled.

However, when I tried to enable SIOC on the first datastore I got the following error: "The vSphere Enterprise Plus License for host esxi.company.local does not include SIOC. Please update your license." I was really confused. This error didn't make any sense.

I checked the Licensed Features of all hosts in the new vSphere cluster, and all of them had Storage IO Control listed as an allowed product feature. After 30 minutes of checking all possible settings, I found out that the old cluster still had all these VMFS datastores presented to it, and for some reason another vSphere admin had added a test ESXi host to the old cluster. Since there was no Enterprise Plus license available, he used an Enterprise license, which obviously doesn't cover SIOC.

The fix was quick and easy: I unpresented the VMFS LUNs from this test host and rescanned the storage configuration.

Tuesday 20 September 2011

Remote Tech Support Mode in ESXi 4.1 - unexpected feature

Just found out about an interesting feature of ESXi Remote TSM. One might even call it a security risk.

I usually use Remote TSM to access the command line of our ESXi hosts. By default, SSH access is disabled on all hosts, so I have to enable it temporarily, run the commands I need and then disable SSH again.

However, today I forgot to close my PuTTY SSH session to the host. While checking vSphere I noticed a yellow alarm sign on one of the hosts warning that Remote TSM was enabled. I immediately disabled it.

20 minutes later I noticed my PuTTY client was still connected to that host via SSH and I still had access to the ESXi host's command line. I thought there was a bug in vCenter and it probably hadn't disabled TSM. However, when I tried to open a new SSH session to the host, PuTTY failed to set one up. So disabling Remote TSM does not close sessions that are already open, and by default a TSM session never expires; this means you should consider adjusting the timeout value for TSM.

1. Go to the Advanced Settings of the ESXi host.

2. Locate the "UserVars.TSMTimeOut" key.

3. Set it to the desired value in seconds.

Monday 5 September 2011

Updating network and HBA drivers on ESXi 4.x

Recently I have been working on the installation and configuration of a small vSphere farm based on HP Blade servers and HP FlexFabric Virtual Connect. During the testing phase of the project I faced an issue with SCSI command failures on one of the HBAs. Despite it being quite a small infrastructure (1 enclosure, 2 FlexFabric VCs, 4 blades, 2 Brocade SAN switches, 2 Cisco 6500 switches and 1 EVA 6400), it was not easy to say what exactly was causing the issue. Moreover, it was my first experience with FlexFabric VC, so I was a bit disappointed with the first results of my installation.

Two days later I found the root cause: a faulty 8Gb FC SFP. While troubleshooting I had to check everything (configuration, firmware, drivers, etc.), and that is when I found out that I had no idea either how to check ESXi driver versions or how to update them. A quick search on the Internet didn't give me a good step-by-step procedure, so I had to go through the vSphere Command-Line Interface Installation and Scripting Guide, and I thought it would be good to document the entire procedure for future use.

Basically, you have 2 options: you can either use vMA or install vCLI on a Windows computer. With vMA you first need to copy the drivers to vMA. I am a lazy person, so I temporarily enabled Remote Tech Support (SSH) on each host and used my workstation to update the drivers.

The first thing to do is check the current driver version.

Networking Driver

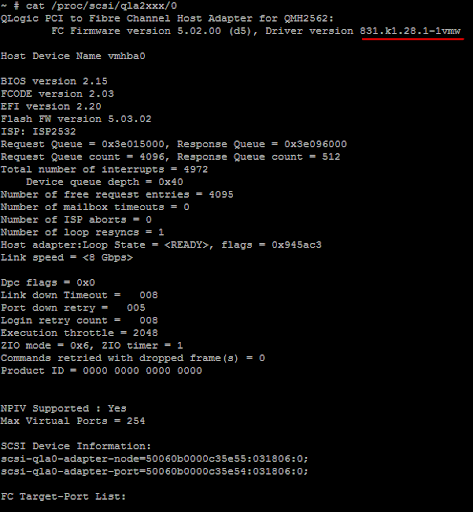

- In the vSwitch properties, check which Network Adapter driver is currently used. In my case it is bnx2x.

- Log in to ESXi via SSH with the root account.

- Run the following command: esxupdate query --v | grep bnx2x. The driver version starts right after 400. In the screenshot you can see there are 2 driver versions: the old driver that was deployed during the ESXi installation and the newer one, which was installed with one of the ESXi firmware upgrades.
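To make "the driver version starts right after 400" concrete, here is a minimal Python sketch that pulls the part after the `400.` prefix (up to the first `-`) out of each matching line. It is meant to run on your workstation against output copied from the host. The sample lines are hypothetical: they are not real `esxupdate` output, so the column layout is an assumption and the pattern may need adjusting.

```python
import re

# Hypothetical lines in the spirit of "esxupdate query --v | grep bnx2x";
# the real column layout may differ, so treat this sample as an assumption.
SAMPLE_OUTPUT = """\
vmware-esx-drivers-net-bnx2x   400.1.52.12.v41.2-1vmw.0.0.260247
vmware-esx-drivers-net-bnx2x   400.1.60.50.v41.2-1vmw.2.17.249663
"""

# Capture what follows the "400." prefix up to the first '-' (the build suffix).
VERSION_AFTER_400 = re.compile(r"\b400\.([0-9A-Za-z.]+)")


def driver_versions(text, driver="bnx2x"):
    """Return the version part found after '400.' on each line mentioning the driver."""
    versions = []
    for line in text.splitlines():
        if driver not in line:
            continue
        match = VERSION_AFTER_400.search(line)
        if match:
            versions.append(match.group(1))
    return versions


if __name__ == "__main__":
    print(driver_versions(SAMPLE_OUTPUT))
```

On the sample above it returns `['1.52.12.v41.2', '1.60.50.v41.2']`, i.e. the older and the newer driver side by side.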

HBA Driver

1. Log in to your ESXi host via SSH.

2. Change directory to /proc/scsi/qlaxxx (QLogic based) or /proc/scsi/lpfcxxx (Emulex based).

3. You will find several files (one file per SCSI HBA adapter); in my system there are just two of them.

4. Look into the content of these files to get the driver version.
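If you have many adapters or hosts, reading each file by hand gets tedious. Below is a minimal Python sketch that reports the driver version from every file in such a directory, meant to run against copies of the files on a workstation. It assumes a QLogic-style "Driver version ..." line; Emulex files are laid out differently, so the pattern is an assumption you would have to adapt, and the sample content in the demo is hypothetical.

```python
import re
import tempfile
from pathlib import Path

# QLogic-style "/proc/scsi/qla.../N" files contain a "Driver version ..." line.
# Emulex files are laid out differently, so this pattern is an assumption to adapt.
DRIVER_VERSION = re.compile(r"Driver version\s+(\S+)")


def hba_driver_versions(proc_dir):
    """Map each adapter file name in proc_dir to the driver version it reports (or None)."""
    versions = {}
    for entry in sorted(Path(proc_dir).iterdir()):
        if entry.is_file():
            match = DRIVER_VERSION.search(entry.read_text(errors="replace"))
            versions[entry.name] = match.group(1) if match else None
    return versions


if __name__ == "__main__":
    # Demo on copies of the files; the content below is a hypothetical sample.
    sample = (
        "QLogic PCI to Fibre Channel Host Adapter for QLE2462:\n"
        "        Firmware version 4.04.09 [IP] [84XX], Driver version 8.02.01-k1-vmw38\n"
    )
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "6").write_text(sample)
        Path(tmp, "7").write_text(sample)
        print(hba_driver_versions(tmp))
```

A file that does not contain the expected line is reported as `None` rather than silently skipped, so an unexpected layout is easy to spot.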

Now we are done collecting information. The next step is the driver upgrade process itself. The upgrade steps are the same for networking and HBA drivers. First, look for the latest version of the drivers you need at the VMware Download Center.

1. Put the ESXi server into maintenance mode, either from the GUI or from vCLI using the command vicfg-hostops.pl --server SERVERNAME --operation enter

2. From vCLI run the following command. Make sure you have entered the correct server name and path.

- vihostupdate --server ESX01 --scan --bundle c:\update\hba.zip

This will tell you which of the drivers in your zip file are applicable and not yet installed on your ESXi host.

3. Once the output of the previous command confirms everything is fine, run the next command:

- vihostupdate --server ESX01 --install --bundle c:\update\hba.zip

4. Repeat steps 2 and 3 for networking drivers.

5. Reboot the host and exit maintenance mode.
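When several hosts and bundles are involved, it helps to generate the command lines in the right order instead of typing them. Here is a minimal Python dry-run sketch that only builds the commands for one host following the steps above; it does not execute anything. It uses vihostupdate's `--scan` and `--install` options and vicfg-hostops' `enter`, `reboot` and `exit` operations; `c:\update\net.zip` is a hypothetical name for the networking bundle.

```python
def upgrade_commands(server, bundles):
    """Build the vCLI command lines for one host in the order of the steps above (dry run only)."""
    commands = [["vicfg-hostops.pl", "--server", server, "--operation", "enter"]]
    for bundle in bundles:
        # Scan first so you can review which bulletins apply, then install the same bundle.
        commands.append(["vihostupdate", "--server", server, "--scan", "--bundle", bundle])
        commands.append(["vihostupdate", "--server", server, "--install", "--bundle", bundle])
    # Reboot while still in maintenance mode, then leave maintenance mode once the host is back.
    commands.append(["vicfg-hostops.pl", "--server", server, "--operation", "reboot"])
    commands.append(["vicfg-hostops.pl", "--server", server, "--operation", "exit"])
    return commands


if __name__ == "__main__":
    # c:\update\net.zip is a hypothetical name for the networking driver bundle.
    for cmd in upgrade_commands("ESX01", [r"c:\update\hba.zip", r"c:\update\net.zip"]):
        print(" ".join(cmd))
```

Keeping it as a printed list lets you paste the commands one by one and check the scan output before each install, which is the whole point of step 2.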

I would really appreciate any comments about the quality of my posts (including my English :) ) and about any wrong conclusions or mistakes I may have made here.

Thursday 4 August 2011

Transparent Page Sharing - efficiency of disabling Large Pages

Finally I found some free time to prove how efficient Transparent Page Sharing is. To do so I had to disable Large Page support on all 10 hosts of our vSphere 4.1 environment.

Let me briefly describe our virtual server farm. We have 10 ESXi hosts with 2 Xeon 5650 CPUs in each host. 6 hosts are equipped with 96 GB and 4 hosts with 48 GB, which gives us 768 GB in total. Since all CPUs support hardware-assisted MMU, all ESXi hosts aggressively back virtual machines' RAM with Large Pages. Therefore, TPS won't kick in unless there is memory contention on a host.

We run 165 virtual machines with 493 GB of assigned vRAM. According to Figure 1, during the last week we had 488 GB of consumed RAM and 33 GB of shared RAM. When I started studying TPS I was very surprised to see some shared memory even though Large Pages were enabled. However, now I know that these 33 GB are just zero pages, which are shared when a VM is powered on, without even being allocated in the ESXi host's physical RAM.

In Figure 2 we can see that CPU usage was very low across the whole cluster for the last week. According to VMware, enabling Large Pages can be pretty effective at reducing CPU usage: VMware documents show about 25% lower CPU usage with LP enabled. Considering that our CPU usage was only about 3%, I didn't see a problem with increasing it to get the benefits of TPS.

It took me a while to disable LP support on all hosts and then put each host into and out of maintenance mode, one by one, to start taking advantage of TPS. In Figure 3 you can see memory stats for the period when I was still moving VMs around. The value of shared memory is not final yet, but you can see how fast ESXi hosts scan and share memory for VMs.

Since we disabled large page support, we should get a higher rate of TLB misses, which in turn should lead to more CPU cycles spent walking through two page tables:

- The VM's page table, to find the guest virtual address to guest physical address mapping

- The nested page table, to find the guest physical address to host physical address mapping
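To put a rough number on "walking through two page tables", here is a simplified Python model of the worst-case two-dimensional page walk on x86-64, assuming 4-level page tables and that a 2 MB large page ends the nested walk one level earlier. It ignores the page-walk caches real CPUs use, so it is an upper bound on memory references per TLB miss, not a measurement of our hosts.

```python
def nested_walk_refs(guest_levels, host_levels):
    """Worst-case memory references for a two-dimensional page walk after a TLB miss.

    Reading each guest page-table entry first needs that entry's guest physical
    address translated through all host levels (host_levels references) plus the
    read itself (1 reference); the final guest physical address needs one more
    host translation. Total: guest_levels * (host_levels + 1) + host_levels.
    Simplified model: ignores page-walk caches and partial TLB hits.
    """
    return (guest_levels + 1) * (host_levels + 1) - 1


if __name__ == "__main__":
    print("native walk, no virtualization:", 4)
    print("guest 4 KB pages, host backs with 2 MB pages:", nested_walk_refs(4, 3))
    print("guest 4 KB pages, host backs with 4 KB pages:", nested_walk_refs(4, 4))
```

Disabling Large Pages on the ESXi side moves the worst case from 19 to 24 references per miss in this model, which is the extra work the post expected to show up as CPU usage.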

However, if you look at Figure 4 you won't see any CPU usage increase. The spikes you can see there are mostly caused by massive vMotions from host to host.

Unfortunately, I couldn't do all 10 hosts at once during working hours, as there are some virtual MS Failover Clusters running in our vSphere and I needed to fail over some cluster resources before moving a cluster node off an ESXi host; therefore the last host was done only 5 hours later. You can see in Figure 5 that the last host was done at around 7:20 pm. 5 hours after the change had been implemented we already had 214 GB of shared memory. Consumed memory decreased from 488 GB to 307 GB, saving us about 180 GB already. As you can see in Figure 6, there is still no CPU usage penalty due to disabled Large Pages.
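The arithmetic behind these numbers, using only the figures quoted in this post (6 hosts with 96 GB plus 4 hosts with 48 GB, and consumed memory of 488 GB before and 307 GB five hours after the change), as a small Python check:

```python
def memory_summary(host_ram_gb, consumed_before_gb, consumed_after_gb):
    """Return total physical RAM, GB saved, and the saving as a percentage of the old consumption."""
    total = sum(host_ram_gb)
    saved = consumed_before_gb - consumed_after_gb
    return total, saved, round(100 * saved / consumed_before_gb, 1)


if __name__ == "__main__":
    hosts = [96] * 6 + [48] * 4  # 6 hosts with 96 GB, 4 hosts with 48 GB
    total, saved, pct = memory_summary(hosts, 488, 307)
    print(f"physical RAM: {total} GB, saved: {saved} GB ({pct}% of consumed)")
    print(f"consumed share of physical RAM: {488 / total:.1%} -> {307 / total:.1%}")
```

It prints 768 GB of physical RAM and 181 GB saved (37.1% of the previous consumption), with consumed memory dropping from 63.5% to 40.0% of physical RAM.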

In the couple of screenshots below you can see the stats 24 hours after the change was done.

I have seen some posts on the VMware communities where people asked about the CPU impact of disabling Large Pages. The conclusion of this post can be a good answer to all those questions: switching off Large Pages saved us 210 GB and CPU usage still stays very low.

With the vSphere 5 release the benefits of TPS are no longer as significant, but I guess a lot of companies will stay with vSphere 4.1 for a while. Also, the new vRAM entitlements made TPS a bit more useful.

Nevertheless, it is difficult to generalize the performance impact of disabling Large Pages. I wouldn't recommend doing so without proper testing, as TPS efficiency can vary significantly depending on the specifics of your vSphere environment.

Update: I have been using Veeam Monitor to collect all these stats in the Cluster view, but today I also checked vCenter performance stats and discovered a difference in the Shared and Consumed values. However, if I check the Resources stats (which include all VMs) in Veeam Monitor, I get the same stats as in vCenter. According to vCenter the amount of shared memory is even higher: 280 GB against 250 GB in Veeam Monitor. That basically means more than 50% physical memory savings!

Tuesday 2 August 2011

Multiple complaints forced VMware to change vRAM Entitlements

As far as I am aware, right after VMware started to receive tons of negative feedback about the new vSphere 5 licensing model, they began reviewing clients' current vRAM usage. I guess they surveyed quite a lot of clients to compare the current amount of physical RAM with the actual vRAM assigned, and drew the proper conclusions.

So here are the latest rumors, and I personally believe they will come true.

- The vRAM entitlement per Enterprise and Enterprise Plus license is going to be doubled, to 64GB and 96GB respectively.

- The vRAM entitlement per Essentials and Essentials Plus license will be increased from 24GB to 32GB.

- The maximum vRAM for Essentials/Essentials Plus will be increased to 192GB.

- A maximum of 96GB per VM will be counted against your vRAM pool limit, even if you assign 1TB to this VM.
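To see how these rumored rules combine, here is a minimal Python sketch of a vRAM pool check. It assumes, as VMware's vSphere 5 licensing describes, that only powered-on VMs count against the pool; the entitlement numbers are the rumored values from the list above, so treat them as illustrative rather than official.

```python
# Entitlements per CPU license as listed in the rumors above (GB).
ENTITLEMENT_GB = {
    "Essentials": 32,
    "Essentials Plus": 32,
    "Enterprise": 64,
    "Enterprise Plus": 96,
}
ESSENTIALS_MAX_GB = 192  # cap on the Essentials / Essentials Plus pool
PER_VM_CAP_GB = 96       # no VM counts more than this against the pool


def vram_pool_gb(edition, cpu_licenses):
    """Pooled vRAM entitlement for a number of CPU licenses of one edition."""
    pool = ENTITLEMENT_GB[edition] * cpu_licenses
    if edition.startswith("Essentials"):
        pool = min(pool, ESSENTIALS_MAX_GB)
    return pool


def counted_vram_gb(powered_on_vm_vram_gb):
    """vRAM charged against the pool: configured vRAM of powered-on VMs, capped per VM."""
    return sum(min(vram, PER_VM_CAP_GB) for vram in powered_on_vm_vram_gb)


if __name__ == "__main__":
    pool = vram_pool_gb("Enterprise Plus", 4)  # e.g. two 2-socket hosts
    used = counted_vram_gb([1024, 16, 8])      # the 1 TB VM counts as 96 GB
    print(f"pool {pool} GB, counted {used} GB, within pool: {used <= pool}")
```

In the example, four Enterprise Plus licenses give a 384 GB pool, and a 1 TB VM plus two small ones count as only 120 GB.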

Honestly, I think it is a very adequate response to all the criticism VMware got over the last few weeks, but I am wondering why VMware's marketing guys couldn't foresee such a reaction. Perhaps it shows that VMware was not fully aware of how their products were used in client companies. I guess VMware will now carry out such resource usage reviews on a regular basis.

Taken from here: http://derek858.blogspot.com/2011/07/impending-vmware-vsphere-50-license.html

Update: Official announcement of vRAM entitlement change is expected on 3rd of August.

Update 1: Official announcement is here - http://blogs.vmware.com/rethinkit/2011/08/changes-to-the-vram-licensing-model-introduced-on-july-12-2011.html

Here is a comparison table with the changes that were announced on 12th of July.

Another nice thing to mention: vRAM usage will be calculated as the average amount used over the last 12 months. So rare spikes in vRAM usage will only slightly increase average vRAM usage, and you will not need to pay for such spikes perpetually.
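A small Python sketch shows why a rare spike barely moves a 12-month average. It assumes one usage sample per day and a 365-day window standing in for "12 months"; the exact sampling VMware uses is not described in this post, so the daily sampling and the numbers are assumptions.

```python
def average_vram_gb(daily_peaks_gb, window_days=365):
    """Average vRAM usage over the most recent window of daily samples."""
    recent = daily_peaks_gb[-window_days:]
    if not recent:
        raise ValueError("need at least one sample")
    return sum(recent) / len(recent)


if __name__ == "__main__":
    usage = [300] * 364 + [665]  # a year at 300 GB with one 665 GB spike day
    print(average_vram_gb(usage))  # the spike lifts the average from 300 GB to 301 GB
```

Even a day at more than double the normal usage adds only 1 GB to the average, and it drops out of the window completely a year later.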

Tuesday 26 July 2011

vCenter Inventory Snapshot - Alternative way of resolving vCenter's SQL issues

Somewhere on the upgrade path from VI 3.5 to vSphere 4.0 and finally to vSphere 4.1 we broke the performance stats in vCenter. I could still see real-time data, but I couldn't select any historical data. Since I intended to disable Large Pages on all cluster hosts and prove huge RAM savings with TPS, I needed that historical data. Surely, the first website I visited was VMware's, and I immediately found a KB article describing all the symptoms I experienced. With my near-zero SQL experience I followed the entire procedure, and it even seemed that I had fixed the issue; at least I could select historical data options.

However, after checking it for a couple of days I found out that the historical data was broken and discontinuous. Moreover, when I tried to use Veeam Monitor for VMware I couldn't get any CPU or memory usage data at the vCenter level. That is when I remembered that I had recently spotted the Inventory Snapshot product from VMware Labs in someone's blog.

Generally speaking, Inventory Snapshot is a bunch of PowerCLI commands that retrieve all essential data from vCenter, such as Datacenters, Hosts, VMs, HA & DRS cluster settings, Resource Pools, Permissions, Roles, etc. You can check the whole list of inventory items yourself. Once it has collected all the data from vCenter, it generates PowerCLI code that can reproduce your entire environment, either on a new vCenter or on the existing one if you managed to corrupt your SQL database. As you have probably guessed, it doesn't save performance data, but in my case it was the ideal tool. With all vCenter SQL databases backed up, I could play around as much as I wanted.

Here is a brief overview of the steps I went through to get my problem solved. However, you definitely want to read the Inventory Snapshot documentation, because I am not inclined to reproduce the official documentation :)

1. Stop the vCenter service and back up its SQL database.

2. Bring the vCenter service back online.

3. Run Inventory Snapshot and provide the administrator's credentials.

4. Once all inventory is retrieved and saved to a .ps file, you need to provide the root password for the ESXi hosts. This is the weakest part of this product, since all passwords are stored in plain text, although we need to remember that this is a lab product.

5. Stop vCenter again, open a command prompt, navigate to C:\Program Files\VMware\Infrastructure\VirtualCenter\ and run vpxd.exe -b. It will fully reinitialize the vCenter database, as if it were newly installed. At this step I got some warnings about licenses, but it seems they were not important.

6. Start vCenter.

7. Run PowerCLI, log in to vCenter and apply the .ps file you saved before. With 10 hosts, 4 Resource Pools and 190 VMs it took Inventory Snapshot about 20 minutes to fully import all objects back.

So now I have all types of performance graphs, and it was way simpler than going through the VMware KB to fix something in the SQL database.

In comments on the VMware Labs site I saw people complaining about missing permissions after they imported the inventory, but in my case all permissions came back correctly.

Very important to know!!! The problem I had was that Inventory Snapshot couldn't recreate part of a dvSwitch. Luckily, it was used only for a couple of my test hosts. The error says "The host proxy switch associated to dvSwitch no longer exists in vCenter". Today is too late to start investigating. Tomorrow I will try to inform the developers if it is not a known bug yet. Anyway, it is still a kind of beta version, and you need to take all precautions before you start playing with it.

PS. Veeam Monitor still shows zero CPU and memory usage. According to the Veeam Community forum I need to ask their support for a fix. Why can't they just post it on the website if it is a well-known issue?

If you find this post useful please share it with any of the buttons below.

However, after checking it for a couple of days, I found that the historical data was broken and discontinuous. Moreover, when I tried to use Veeam Monitor for VMware, I couldn't get any CPU or memory usage data at the vCenter level. That is when I remembered that I had recently spotted the Inventory Snapshot product from VMware Labs in someone's blog.

Generally speaking, Inventory Snapshot is a bunch of PowerCLI commands that retrieve all essential data from vCenter, such as Datacenters, Hosts, VMs, HA & DRS cluster settings, Resource Pools, Permissions, Roles, etc. You can check the whole list of inventory items yourself. Once it has collected all data from vCenter, it generates PowerCLI code that can reproduce your entire environment either on a new vCenter or on the existing one if you have managed to corrupt your SQL database. As you have probably guessed, it doesn't save performance data, but in my case it was the ideal tool. With all vCenter SQL databases backed up, I could play around as much as I wanted.
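To make the "collect, then generate code" idea concrete, here is a minimal Python sketch; it is not the tool's actual code. It assumes a hypothetical inventory dictionary (datacenter, then clusters, then resource pools) and turns it into PowerCLI-style lines in parent-before-child order, which any replay script has to respect. `New-Datacenter`, `New-Cluster` and `New-ResourcePool` are real PowerCLI cmdlets, but the exact parameters Inventory Snapshot emits are an assumption here, so check them against the PowerCLI reference.

```python
from typing import Dict, List

# Hypothetical inventory shape: datacenter -> cluster -> settings and resource pools.
Inventory = Dict[str, Dict[str, dict]]


def generate_replay_script(inventory: Inventory) -> List[str]:
    """Emit PowerCLI-style lines that recreate the inventory, parents first."""
    lines: List[str] = []
    for dc_name, clusters in inventory.items():
        # A datacenter must exist before anything can be created inside it.
        lines.append(f'New-Datacenter -Name "{dc_name}" -Location (Get-Folder -NoRecursion)')
        for cl_name, cfg in clusters.items():
            flags = ""
            if cfg.get("ha"):
                flags += " -HAEnabled"
            if cfg.get("drs"):
                flags += " -DrsEnabled"
            lines.append(
                f'New-Cluster -Name "{cl_name}" -Location (Get-Datacenter "{dc_name}"){flags}'
            )
            # Resource pools are created inside their parent cluster.
            for rp in cfg.get("resource_pools", []):
                lines.append(
                    f'New-ResourcePool -Name "{rp}" -Location (Get-Cluster "{cl_name}")'
                )
    return lines


if __name__ == "__main__":
    snapshot = {
        "Lab-DC": {
            "Prod": {"ha": True, "drs": True, "resource_pools": ["Gold", "Silver"]},
        }
    }
    for line in generate_replay_script(snapshot):
        print(line)
```

Generating text rather than calling vCenter directly is what makes the snapshot reusable: the same script can be replayed against a freshly reinitialized database or a brand new vCenter.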

Here is a brief overview of the steps you need to go through to solve a problem like mine. However, you will definitely want to read the Inventory Snapshot documentation, because I am not inclined to reproduce the official documentation :)

1. Stop the vCenter service and back up its SQL database.

2. Bring the vCenter service back online.

3. Run Inventory Snapshot and provide the administrator's credentials.

4. Once the whole inventory has been retrieved and saved to a .ps file, you need to provide the root password for the ESXi hosts. This is the weakest part of the product, since all passwords are stored in plain text, although we need to remember that this is a lab product.

5. Stop vCenter again, open a command prompt, navigate to C:\Program Files\VMware\Infrastructure\VirtualCenter\ and run vpxd.exe -b. This fully reinitializes the vCenter database, as if vCenter had just been installed. At this step I got some warnings about licenses, but they did not seem to be important.

6. Start vCenter.

7. Run PowerCLI, log in to vCenter and apply the .ps file you saved earlier. With 10 hosts, 4 resource pools and 190 VMs, it took Inventory Snapshot about 20 minutes to import all objects back.
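The order of these steps matters: the database backup has to happen before `vpxd.exe -b` wipes the repository. The Python sketch below only builds and prints a dry-run plan that encodes this ordering; it runs nothing. The service name `vpxd`, the `sqlcmd` backup line and the `VIM_VCDB` database name are assumptions (common defaults for vCenter 4.x, but verify them on your own server), and `build_plan`/`check_order` are hypothetical helpers.

```python
from typing import List, Tuple

VC_DIR = r"C:\Program Files\VMware\Infrastructure\VirtualCenter"
# Assumptions: vCenter service short name and default database name.
SERVICE = "vpxd"
DB_NAME = "VIM_VCDB"


def build_plan(backup_file: str) -> List[Tuple[str, str]]:
    """Return (description, command) pairs in the order they must run."""
    return [
        ("1. stop vCenter", f"net stop {SERVICE}"),
        ("1. back up the database",
         f"sqlcmd -Q \"BACKUP DATABASE [{DB_NAME}] TO DISK='{backup_file}'\""),
        ("2. start vCenter", f"net start {SERVICE}"),
        ("3-4. run Inventory Snapshot, save the .ps file", ""),
        ("5. stop vCenter", f"net stop {SERVICE}"),
        ("5. reinitialize the database", f'cd /d "{VC_DIR}" && vpxd.exe -b'),
        ("6. start vCenter", f"net start {SERVICE}"),
        ("7. replay the saved .ps file from PowerCLI", ""),
    ]


def check_order(plan: List[Tuple[str, str]]) -> bool:
    """Sanity check: the backup must come before the destructive reinit."""
    cmds = [cmd for _, cmd in plan]
    backup = next(i for i, c in enumerate(cmds) if "BACKUP DATABASE" in c)
    reinit = next(i for i, c in enumerate(cmds) if "vpxd.exe -b" in c)
    return backup < reinit


if __name__ == "__main__":
    plan = build_plan(r"D:\backup\vcdb.bak")
    assert check_order(plan)
    for desc, cmd in plan:
        # Empty commands are GUI or PowerCLI steps done by hand.
        print(f"{desc:50} {cmd or '(manual step)'}")
```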

So, now I have all types of performance graphs back, and it was way simpler than going through VMware KB articles to fix something in the SQL database.

In the comments on the VMware Labs site I saw people complaining about missing permissions after they imported the inventory, but in my case all permissions came back correctly.

Very important to know!!! The problem I had was that Inventory Snapshot couldn't recreate part of the dvSwitch. Luckily, it was used only for a couple of my test hosts. The error says "The host proxy switch associated to dvSwitch no longer exists in vCenter". It is too late today to start investigating. Tomorrow I will try to inform the developers if it is not a known bug yet. Anyway, it is still a kind of beta version, and you need to take every precaution before you start playing with it.

PS. Veeam Monitor still shows zero CPU and memory usage. According to the Veeam Community forum, I need to ask their support for a fix. Why can't they just post it on the website if it is a well-known issue?

If you find this post useful please share it with any of the buttons below.

Friday 22 July 2011

VLAN Tagging and use cases of VLAN ID 4095

It was quite surprising for me to learn how useful VLAN 4095 can be, but let me start with the basics.

There are 3 main approaches to VLAN tagging: External Switch Tagging (EST), Virtual Switch Tagging (VST) and Virtual Guest Tagging (VGT).

External Switch Tagging

With this approach the ESX host doesn't see any VLAN tags. All of them are stripped off by the external physical switch before the traffic is sent to the corresponding physical port. Therefore, from the ESXi perspective you need one vmnic per VLAN.

I really don't know in which situations such a config might be useful.

Virtual Switch Tagging

This is the most popular way of connecting vSphere to the physical network. All traffic coming down to the vSwitch is tagged with a VLAN ID. It is now the vSwitch's responsibility to strip off the VLAN tag and send the packet to the virtual machine in the corresponding port group. Thereby, you can run all VLANs over one vmnic, although you would probably want another vmnic for redundancy. The same logic applies to packets travelling from a virtual machine to the physical infrastructure: the packet is delivered to the vSwitch, and before it is sent to the physical switch it is tagged with the VLAN ID of the port group the originating virtual machine belongs to.

Virtual Guest Tagging

On some occasions you might need to deliver traffic with VLAN tags directly to a VM and let the VM decide what to do with it. To achieve this, you need to assign the VM to a port group with VLAN ID 4095 and configure the guest OS NICs with the needed VLANs. Interestingly, as soon as you enter VLAN ID 4095, it is automatically changed to All (4095).
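The three modes differ only in who adds and removes the 802.1Q tag. The Python sketch below models the inbound decision of a standard vSwitch port group in a simplified way: VLAN ID 0 stands for EST (the physical switch already removed the tag), 1 to 4094 for VST (the vSwitch accepts the matching tag and strips it), and 4095 for VGT (the frame reaches the guest still tagged). The helper names are hypothetical, and details such as native VLANs and priority handling are ignored.

```python
import struct
from typing import Optional

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def add_tag(frame: bytes, vlan_id: int, pcp: int = 0) -> bytes:
    """Insert an 802.1Q tag right after the destination and source MACs (12 bytes)."""
    tci = (pcp << 13) | (vlan_id & 0x0FFF)
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def read_tag(frame: bytes) -> Optional[int]:
    """Return the VLAN ID if the frame carries an 802.1Q tag, else None."""
    (tpid,) = struct.unpack("!H", frame[12:14])
    if tpid != TPID_8021Q:
        return None
    (tci,) = struct.unpack("!H", frame[14:16])
    return tci & 0x0FFF


def strip_tag(frame: bytes) -> bytes:
    """Remove the 4-byte 802.1Q tag."""
    return frame[:12] + frame[16:]


def deliver_to_vm(frame: bytes, portgroup_vlan: int) -> Optional[bytes]:
    """Simplified inbound decision of a port group; None means 'not delivered'."""
    vid = read_tag(frame)
    if portgroup_vlan == 4095:   # VGT: the guest sees the tag and decides itself
        return frame
    if portgroup_vlan == 0:      # EST: only untagged frames are expected
        return frame if vid is None else None
    if vid == portgroup_vlan:    # VST: matching tag is stripped before delivery
        return strip_tag(frame)
    return None


if __name__ == "__main__":
    untagged = bytes(12) + b"\x08\x00" + b"payload"  # zero MACs, IPv4 EtherType
    tagged = add_tag(untagged, 10)
    print(deliver_to_vm(tagged, 10) == untagged)  # True: VST strips the tag
    print(deliver_to_vm(tagged, 4095) == tagged)  # True: VGT keeps it
```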

I didn't pay much attention to EST and VGT for the simple reason that they are not used at all in our vSphere farms, and I don't want to spend time on something I will never have hands-on experience with. However, when I asked myself how I could sniff traffic on a vSwitch, Google led me directly to VLAN 4095.

Here are two popular use cases for VLAN 4095:

1. Traffic sniffing - from time to time you face networking problems and would like to use a sniffing tool (or a network protocol analyzer, as Wireshark calls itself) to see what is going on behind the scenes. Usually, you could enable promiscuous mode on a specific port group and use one of the VMs in that port group to listen to the traffic. However, this creates additional security risks, as promiscuous mode lets all VMs in that port group see all frames passed on the vSwitch in the allowed VLAN. It also doesn't let you sniff traffic from different VLANs simultaneously. That's why you would prefer a special port group with VLAN 4095 and promiscuous mode enabled, to which you connect the virtual adapter of the VM you will use for traffic sniffing.

2. IDS - another good use case of VLAN 4095 is to let your virtual IDS inspect all vSwitch traffic. According to the book "VMware vSphere and Virtual Infrastructure Security: Securing the Virtual Environment", this is quite a common scenario for virtual IDS placement.
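Why a dedicated port group helps can be shown with a toy model. The Python sketch below assumes a VM port receives only unicast frames (broadcast and multicast handling are ignored), and `Frame`, `sniffer_sees` and the MAC strings are hypothetical names. Promiscuous mode widens visibility to all frames of the port group's VLAN, while VLAN 4095 widens it to every VLAN on the vSwitch.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Frame:
    vlan: int      # VLAN the frame belongs to on the vSwitch
    dst_mac: str   # unicast destination MAC


def sniffer_sees(frames: List[Frame], pg_vlan: int, promiscuous: bool,
                 my_mac: str) -> List[Frame]:
    """Return the frames a VM port would receive (unicast-only toy model)."""
    seen: List[Frame] = []
    for frame in frames:
        # VLAN 4095 (All) puts every VLAN in scope; otherwise only the port group's VLAN.
        in_scope = pg_vlan == 4095 or frame.vlan == pg_vlan
        if not in_scope:
            continue
        # Without promiscuous mode the port only gets frames addressed to its own MAC.
        if promiscuous or frame.dst_mac == my_mac:
            seen.append(frame)
    return seen


if __name__ == "__main__":
    traffic = [Frame(10, "vm-a"), Frame(10, "vm-b"), Frame(20, "vm-c")]
    print(len(sniffer_sees(traffic, 10, False, "sniffer")))   # 0: normal port
    print(len(sniffer_sees(traffic, 10, True, "sniffer")))    # 2: promiscuous, VLAN 10 only
    print(len(sniffer_sees(traffic, 4095, True, "sniffer")))  # 3: promiscuous, all VLANs
```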

If you know more situations where VLAN 4095 can be useful, feel free to share them in the comments. I really like learning vSphere features through real-life examples.

Monday 18 July 2011

vSphere 5 - Virtual Storage Appliance

In one of the comments on the numerous blog articles I have been reading lately, I noticed quite a sceptical opinion about the vSphere 5 Virtual Storage Appliance (VSA) with regard to its capabilities and price. That motivated me to spend a day reading about VSA and forming my own opinion about it.

So, here is a short overview of VSA from a vSphere admin.

The main goal of VSA is to provide SMB companies with shared storage without buying a physical NAS or SAN. Instead, VSA uses the internal storage of your ESXi hosts to create shared NFS storage that is presented as a single entity to all hosts in your vSphere environment. This is how it looks in a 3-node configuration.

Saturday 16 July 2011

Unexpected benefit of SnS

Just found out that if you buy a Support and Subscription (SnS) contract, you are entitled to a free copy of SUSE Linux Enterprise Server (SLES) for VMware. This also includes a free subscription to all patches and updates for SLES. Moreover, you get free technical support as well.

I guess it is a good reason for me to start studying Linux.

Thursday 14 July 2011

vSphere 5 licensing - what upgrade path to choose?

Found a good licensing calculation for a vSphere 5 upgrade based on quite a big production environment.

So I just followed this guy's advice and tried to do the calculations for our production farm.

- 180 virtual machines with 427 GB of consumed RAM

- 10 licensed HP BL460 hosts in 1 cluster: 20 CPUs, 720 GB RAM in total

- 20 Enterprise edition licenses

If we want to keep vRAM equal to the current physical RAM and stay with the Enterprise edition once we upgrade to vSphere 5, we will need 720GB / 32GB = 22.5, rounded up to 23 licenses. Since we have a support contract for another 2 years, upgrading our 20 current licenses will cost us nothing. We will only need to buy 3 more licenses, and the pricing hasn't changed. It will cost us $2,845x3=$8,535.

There is another, more interesting way to upgrade to vSphere 5. We can move to vSphere 5 for free and upgrade to the Enterprise Plus edition for $685 per license. It will cost us $685x20=$13,700, and we are fully covered by the 48GB vRAM entitlement of Enterprise Plus: we will be able to use up to 48GBx20=960GB.
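The arithmetic of both options fits in a few lines of Python. This sketch keeps the assumption used above (vRAM should match the 720 GB of physical RAM) and uses the list prices quoted in this post; the function names are hypothetical.

```python
import math
from typing import Tuple

# Figures taken from the post (USD, GB).
ENTERPRISE_VRAM_GB = 32
ENTERPRISE_PLUS_VRAM_GB = 48
NEW_ENTERPRISE_LICENSE_USD = 2845
UPGRADE_TO_ENT_PLUS_USD = 685


def stay_enterprise(target_vram_gb: int, owned: int) -> Tuple[int, int]:
    """Licenses needed to cover target vRAM with Enterprise, and the cost of the extras."""
    needed = math.ceil(target_vram_gb / ENTERPRISE_VRAM_GB)  # 720 / 32 = 22.5 -> 23
    extra = max(0, needed - owned)
    return needed, extra * NEW_ENTERPRISE_LICENSE_USD


def move_to_enterprise_plus(owned: int) -> Tuple[int, int]:
    """Pooled vRAM after upgrading every owned license, and the upgrade cost."""
    return owned * ENTERPRISE_PLUS_VRAM_GB, owned * UPGRADE_TO_ENT_PLUS_USD


if __name__ == "__main__":
    needed, cost1 = stay_enterprise(720, 20)
    pool, cost2 = move_to_enterprise_plus(20)
    print(f"Option 1: {needed} Enterprise licenses, extra cost ${cost1:,}")
    print(f"Option 2: {pool} GB pooled vRAM, cost ${cost2:,}")
    print(f"Difference: ${cost2 - cost1:,}")
```

The exact gap between the two options comes out at $5,165, which is the roughly $5,000 premium for Enterprise Plus.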

In a nutshell, we have 2 options:

1. Stay with the Enterprise edition and upgrade to vSphere 5 for $8,535

2. Move to the Enterprise Plus edition of vSphere 5 for $13,700, but have some spare vRAM entitlement and all the fantastic new features of vSphere 5.

It is almost obvious that option number 2 is the winner; an extra $5,000 is not a big deal for such a great package.

The only information I lack is what happens to the support contract once we upgrade to vSphere 5. Do we get new support for vSphere 5? Our contract is still valid, and it would be a waste of money to upgrade before it is close to expiry. This information can definitely influence my initial decision. I will update the post once I obtain it.

All these calculations and figures just confirm my first thoughts about the new vSphere 5 licensing model: it is beneficial and flexible for owners of big farms and Enterprise Plus licenses. It is aimed at big players, and I am afraid smaller virtualization customers may swing a bit towards free MS Hyper-V solutions. It also seems that VMware has decided to give up on the SMB market and focus on large cloud providers.

The value of TPS, the main overcommitment technology, is significantly decreased by the new licensing. The overall rate of memory overcommitment will also go down, although I had assumed that resource overcommitment was one of the main drivers towards virtualization.

Another bad impact I can think of is that vSphere admins will try to size their VMs to fit vRAM pools, and such an approach can badly affect VM performance, thus undermining trust and confidence in virtualization technologies.

Update 1: As I understand it, if you have a Production support contract, you are entitled to a free upgrade to vSphere 5, and your support contract will be transferred to vSphere 5 as well. I guess it is time to review your current support contracts and their expiry dates.

Update 2: Just read something really interesting for those whose Support and Subscription contracts have expired. Normally, this would force you to buy vSphere 5 plus a new SnS contract. However, the information below means you can save quite a few thousand in your currency on the upgrade to vSphere 5 by renewing your expired SnS contracts.

Reinstatement Options for Customers with Inactive SnS Contracts:

- The applicable SnS fees for the current contract term

- Fees that would have been paid for the period of time that the customer’s SnS contract was not active

- A 20 percent fee on the sum of the fees in the preceding two items
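The three reinstatement components combine into a simple formula: (current-term fee + fees for the lapsed period) x 1.2. The Python sketch below encodes it with `Decimal` to avoid floating-point rounding on money; the fee figures in the example are hypothetical, not real VMware prices.

```python
from decimal import Decimal


def reinstatement_cost(current_term_fee: Decimal, lapsed_period_fee: Decimal,
                       penalty_percent: Decimal = Decimal("20")) -> Decimal:
    """Sum of the three reinstatement components listed above."""
    subtotal = current_term_fee + lapsed_period_fee           # items 1 and 2
    penalty = subtotal * penalty_percent / Decimal("100")      # item 3: 20% of that sum
    return subtotal + penalty


if __name__ == "__main__":
    # Hypothetical figures, purely for illustration.
    print(reinstatement_cost(Decimal("1000"), Decimal("500")))  # 1800
```

Comparing this total with the price of new vSphere 5 licenses plus a fresh SnS contract shows whether reinstating is worth it.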

Wednesday 13 July 2011

vSphere 5 - new features from the admin's perspective

It is really hard to write about vSphere products more interestingly and intelligently than Duncan Epping does; however, I am so excited about everything I have heard and read today (and I am sure I will read more for at least the next 2 hours) that I decided to publish some short notes I managed to catch in the waterfall of vSphere 5 new features.

- Currently about 40% of server workloads run in virtual environments; this number will reach 50% by the end of 2011

- The main point of the first part of the presentation was the global move to the cloud computing model, either private or public. It is no longer only about virtualizing your physical servers, but about providing a Virtual Machine App Store to clients. Basically, VMware is pushing all vSphere implementations towards the IT as a Service model.

- New cloud infrastructure suite now consists of the following products:

- vSphere 5

- vCenter SRM 5

- vCenter operations 1.0

- vShield Security 5

- vCloud Director 1.5

Let’s concentrate on the most important part of vSphere 5 improvements:

- Profile Driven Storage – allows you to create storage tiers, which they also call Datastore Clusters. For instance, you create 3 datastore clusters with different performance specs: flash disks for Tier 1, SAS disks for Tier 2 and FATA disks for Tier 3. When you create a VM, you just assign it to the proper datastore cluster according to its service level requirements. If the performance of the current VMFS datastore doesn't meet the VM's requirements, the VM will be migrated to a better VMFS datastore. To me it seems like a software replacement for multi-tier hardware storage solutions like HP 3PAR arrays.

- Storage DRS – very similar to regular DRS. It also takes care of the initial placement of a VM with regard to available space, lets you create affinity rules to keep some VMs on separate datastores, and migrates your VMs according to the I/O balancing level you set and the space allocation rules you create. Finally, you can put a datastore into maintenance mode so that all VMs are moved to other datastores for the period of maintenance.

- VMFS 5:

- All VMFS datastores are formatted with a standard 1MB block size

- The new VMFS can grow up to 64TB

- A VMDK file is limited to 2TB

- vSphere 5 supports both new and old versions of VMFS

- Upgrading to VMFS 5 is easy and fast

- vSphere Storage Appliance – not sure if I got all the details correctly. Briefly, it lets you use local ESXi disks to create a kind of virtual shared storage. You don't need physical shared storage, but you can still use some of its benefits. However, nothing was said about its limitations and restrictions, which is something for me to investigate. I guess it is mostly aimed at small vSphere implementations.

- New Hardware Version 8, including 3D graphics and Mac OS X Server support

- New VM maximums - now you can create a Monster VM:

- 32 vCPUs

- 1TB of RAM

- 36 Gbps

- 1,000,000 IOPS

- High Availability

- The concept of HA has been completely changed to a Master/Slave model with an automated election process. There is now only one Master, and the rest of the nodes are Slaves. The Master coordinates all HA actions. With this new model, vSphere admins don't need to worry about the placement and distribution of HA primary hosts across blade enclosures

- HA doesn’t rely on DNS anymore

- The big change for HA is that nodes now also use storage paths and subsystems for communication, in addition to the network. This helps nodes better understand the health of their neighbors.

- FT VMs still have a limit of 1 vCPU, but perhaps we can use more than 1 core per vCPU with an FT VM - I have to check. VMware has just increased the range of FT-supported CPUs and operating systems.

- Auto Deploy – a tool for easy bulk deployment of ESXi hosts. vCenter can keep image and host profiles. You can create rules that tell vCenter which image, host profile and cluster to use when installing a new host. The bad thing for me is that I need to start learning PowerCLI, because you need these skills to create Auto Deploy rules.

- Enhanced Network I/O Control – now it is per virtual machine control. In vSphere 4.1 it was per port with vDS.

- Storage I/O Control – added support for NFS storage

- vMotion – VMware has finally implemented load balancing of vMotion traffic over several vMotion-enabled VMkernel NICs. vMotion is now supported with latency of up to 10ms.

- vCenter Linux-based virtual appliance – you can still use vCenter on Windows. I didn't get what the difference between the two is.

- vCenter SRM 5

- Hardware array-based replication is no longer required: replication can now be done in software, so you can have different storage at your Disaster Recovery site

- Failed-over VMs can fail back to the main vSphere once it is fully restored

- Proactive migration using SRM – for instance, if you expect a power outage at your main site, you can manually initiate failover to the DR site

- vCenter operations 1.0 - SLA Monitoring

Here comes the biggest concern of all admins that already built some plans for upgrade to vSphere 5 – licensing!

Licensing is still counted in CPU units. If you have 4 CPUs in 2 ESXi hosts, you will need 4 licenses. The good news is that your CPUs are no longer limited by the number of cores. More good news is the removal of the per-host RAM limit.

Now we can proceed to the bad news. VMware has created a new definition, vRAM entitlement, that is, how much RAM you can assign to your VMs per license. For instance, with 1 license for vSphere 5 Enterprise edition you are entitled to use 32GB of vRAM. Even if your host has 48GB of RAM, you won't be able to assign more than 32GB to your virtual machines. You can merge vRAM entitlements into pooled vRAM, that is, the sum of your vRAM entitlements across all hosts connected to your vCenter or across all linked vCenter instances. Consumed vRAM must not exceed pooled vRAM. The amount of entitled vRAM differs between vSphere editions.

Here is another example of pooling vRAM. You have 2 hosts with 2 CPUs and 96 GB of RAM each. With 4 Enterprise edition licenses your pooled vRAM equals 128 GB, but you want to use all 192 GB your hosts have. Then you will need to buy 2 more Enterprise edition licenses. Even though you don't have two more CPUs, you can use the vRAM entitlement that comes with each per-CPU license.

As I understand it, powered-off and suspended VMs are not counted in pooled vRAM. I think this will significantly change the approach to VM memory provisioning and management. I can imagine that some admins will start giving VMs less RAM than they need in an attempt to cut licensing expenses.
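The pooling rules described above can be checked with a short Python sketch. It assumes, as this post does, that only powered-on VMs count towards consumed vRAM and that at least one license per CPU is required. `VM` and the helper functions are hypothetical, and VMware's own vRAM calculator remains the authoritative tool.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class VM:
    name: str
    vram_gb: int
    powered_on: bool


def consumed_vram(vms: List[VM]) -> int:
    """Per the post, only powered-on VMs count against the pool."""
    return sum(vm.vram_gb for vm in vms if vm.powered_on)


def pooled_vram(licenses: int, entitlement_gb: int) -> int:
    """Pool = number of licenses x per-license vRAM entitlement."""
    return licenses * entitlement_gb


def licenses_needed(cpu_sockets: int, vram_gb: int, entitlement_gb: int) -> int:
    """One license per CPU is the minimum; buy more if the pool is still too small."""
    return max(cpu_sockets, math.ceil(vram_gb / entitlement_gb))


if __name__ == "__main__":
    # The example above: 2 hosts x 2 CPUs x 96 GB, Enterprise edition (32 GB per license).
    print(pooled_vram(4, 32))            # 128
    print(licenses_needed(4, 192, 32))   # 6, i.e. 2 more than the 4 CPU licenses
    vms = [VM("web", 64, True), VM("test", 32, False)]
    print(consumed_vram(vms))            # 64: the powered-off VM is not counted
```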

Companies with high memory overcommitment will need to pay extra to upgrade to vSphere 5, which makes all memory overcommitment technologies less valuable now. No matter how many GBs you save with Transparent Page Sharing, you still have to pay for all allocated vRAM.

There is also a tool that can help you calculate your consumed vRAM and give you an idea of how many licenses to buy and which edition to choose.

For Enterprise License Agreement holders, the upgrade process is quite straightforward: just contact VMware and they will provide the licenses.

It seems that with the new licensing model VMware is aiming more at big companies, where the flexibility of pooled vRAM across multiple vCenter instances can save some money. SMB companies will definitely need to pay more for vSphere 5.

Update 1: I should never use MS Word for blogging again. It screwed up all my text formatting.

Update 2: Guys, I will really appreciate your feedback and comments about the quality of the blog content.

Update 3: There is no hard restriction on vRAM allocation for the Enterprise and Enterprise Plus editions. If you use more vRAM than you are entitled to, you will get a warning.

Tuesday 5 July 2011

12th of July - Don't miss the vSphere 5 announcement

I knew the new version of vSphere would be released before VMworld 2011, but I didn't realize it was so close. According to the VMware.com site, the presentation of vSphere 5 is scheduled for the 12th of July. Don't forget to register for the live webcast called "Raising the bar, Part V". To make time go faster, you can read the following blog posts about new features in vSphere 5.0 - 1, 2, 3.

I personally like the new Storage DRS, the increased size of VMFS partitions and the Auto Deploy feature. I am very eager to read some tech papers on which new CPU and memory virtualization technologies will be implemented in the new ESXi. However, the first books about vSphere 5 are not going to be released before September 2011.

The only question not covered in all these rumours about vSphere 5 is whether licensing will change. I remember that previously you only needed a proper support contract to upgrade to vSphere 4. If it is still the same, I guess we will be one of the first companies to upgrade our virtual farms. At least, I can promise I will not rest until this happens. I feel like I am 14 again, waiting for a new version of Civilization to be released :)

One week to go!

Why Virtual Standard Switch (vSS) doesn't need Spanning Tree Protocol

Today I want to write down some new things I have learnt recently about the vNetwork Standard Switch in vSphere 4.1 and why it doesn't need the Spanning Tree Protocol.

I assume you already have basic knowledge of switching, VLANs, switching loops, the Spanning Tree Protocol and link aggregation protocols. I will go very quickly through the main features of standard vSwitches, focusing on facts that are not very obvious from the official documentation, at least to me. Generally speaking, this article will be more useful for people who already have some experience with vSphere networking.

The main goal of a standard vSwitch is to provide connectivity between your virtual machines and the physical network infrastructure. Additionally, it provides logical division of your VMs with port groups, offers different load balancing algorithms in case you have more than one uplink, supplies an egress traffic shaping tool (from VMs to physical switches) and, finally, provides network uplink failover detection.
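The claim in the title can be illustrated with a deliberately simplified Python model of vSS forwarding. It assumes two rules commonly described for standard vSwitches: a frame arriving on an uplink is delivered only to local VM ports and is never forwarded out of another uplink, and the vSwitch knows its VMs' MAC addresses directly instead of learning them, so unknown destinations arriving from an uplink are not flooded back out. The uplink choice mimics "Route based on originating virtual port ID" as a plain modulo, which is an approximation; the class and method names are hypothetical.

```python
from typing import Dict, List, Optional


class StandardVSwitch:
    """Very simplified model of vSS forwarding, for illustration only."""

    def __init__(self, uplinks: List[str]) -> None:
        self.uplinks = uplinks
        self.vm_ports: Dict[str, int] = {}  # VM MAC -> virtual port ID

    def connect(self, mac: str, port_id: int) -> None:
        self.vm_ports[mac] = port_id

    def pick_uplink(self, port_id: int) -> str:
        # Approximation of "originating virtual port ID": each port sticks to one uplink.
        return self.uplinks[port_id % len(self.uplinks)]

    def from_vm(self, src_mac: str, dst_mac: str) -> str:
        if dst_mac in self.vm_ports:
            return f"port {self.vm_ports[dst_mac]}"  # local delivery, never leaves the host
        return self.pick_uplink(self.vm_ports[src_mac])

    def from_uplink(self, dst_mac: str) -> Optional[str]:
        # Frames from an uplink only go to local VM ports, never out of another
        # uplink, so the vSwitch cannot bridge two physical ports into a loop.
        if dst_mac in self.vm_ports:
            return f"port {self.vm_ports[dst_mac]}"
        return None  # unknown destination: dropped, not flooded to other uplinks


if __name__ == "__main__":
    vss = StandardVSwitch(["vmnic0", "vmnic1"])
    vss.connect("vm-a", 1)
    vss.connect("vm-b", 2)
    print(vss.from_vm("vm-a", "vm-b"))  # port 2
    print(vss.from_vm("vm-a", "gw"))    # vmnic1
    print(vss.from_uplink("gw"))        # None
```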

Saturday 25 June 2011

HP Virtual Connect Firmware upgrade procedure

Last Sunday I upgraded all firmware in our 2 HP BladeSystem c7000 enclosures to the latest release set 9.30. The process itself is not difficult, but it involves many small details and is very important, as these blades are our production environment, so I decided to write down a step-by-step procedure. I guess it will save me time on the next firmware upgrade and might be helpful for some of you. I would also like to share a couple of problems I encountered during the upgrade.

Here we go

Monday 6 June 2011

vSphere Transparent Page Sharing (TPS)

Every time I learn something new about Transparent Page Sharing (TPS), I get the same excitement I felt as a teenager reading science fiction books about future worlds and technologies. So when you finish reading this post, ask yourself: how cool is TPS? :)

So what is TPS about?

The main goal of TPS is to save memory and thus make it possible to give your virtual machines more memory than your physical host has. This is called memory overcommitment.
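TPS is generally described as hashing guest page contents, treating a hash match only as a hint, confirming it with a full byte comparison, and then backing identical pages with one copy-on-write host page. The toy Python class below mirrors that idea under stated assumptions: `PageSharer` is hypothetical, SHA-1 is just a convenient stand-in hash, and the real ESX scanning process is far more involved.

```python
import hashlib
from typing import Dict, List, Tuple

PAGE_SIZE = 4096


class PageSharer:
    """Toy content-based page sharing: identical pages are backed by one host frame."""

    def __init__(self) -> None:
        self.by_hash: Dict[bytes, List[int]] = {}      # content hash -> candidate frames
        self.frames: List[bytes] = []                  # simulated host memory
        self.mapping: Dict[Tuple[str, int], int] = {}  # (vm, guest page) -> host frame

    def map_page(self, vm: str, gpage: int, content: bytes) -> int:
        digest = hashlib.sha1(content).digest()
        for frame in self.by_hash.get(digest, []):
            # A hash match is only a hint; compare full contents before sharing.
            if self.frames[frame] == content:
                self.mapping[(vm, gpage)] = frame
                return frame
        self.frames.append(content)
        frame = len(self.frames) - 1
        self.by_hash.setdefault(digest, []).append(frame)
        self.mapping[(vm, gpage)] = frame
        return frame

    def write(self, vm: str, gpage: int, content: bytes) -> int:
        """Copy-on-write: the writer gets a private frame; other sharers are untouched."""
        self.frames.append(content)
        frame = len(self.frames) - 1
        self.mapping[(vm, gpage)] = frame
        return frame

    def frames_in_use(self) -> int:
        return len(set(self.mapping.values()))


if __name__ == "__main__":
    tps = PageSharer()
    zero = bytes(PAGE_SIZE)
    tps.map_page("vm1", 0, zero)  # e.g. zeroed pages in two guests
    tps.map_page("vm2", 0, zero)
    print(tps.frames_in_use())    # 1: both guest pages share one host frame
```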

Wednesday 1 June 2011

Basics of Memory Management - Part 2

In my previous article I quickly went through the main elements and logic of memory management. You will definitely want to read it before you start reading this article. Today I would like to go deeper into memory management principles, and particularly into vSphere memory management.

Just to recap the essentials of the previous article: there are 2 main elements of the Memory Management Unit (MMU), the Page Table Walker and the Translation Lookaside Buffer (TLB). When an application sends a request to a specific memory address, it uses the Virtual Address (VA) of the memory. The MMU has to walk through the Page Table, find the Physical Address (PA) that corresponds to the VA and put this pair (VA -- PA) into the TLB. This speeds up subsequent accesses to the same memory address areas. Since the Physical Address (PA) of a virtual machine is still a virtual address, an additional translation layer is needed between the PA and the actual memory address of the ESX host, that is, the Host Address (HA).
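The two translation layers described above (VA to PA inside the guest, PA to HA on the host) and the role of the TLB can be sketched in a few lines of Python. This is a toy under stated assumptions: 4 KB pages, dictionaries standing in for page tables, and a TLB that caches the combined VA to HA result, which is roughly what ESX achieves with shadow page tables or hardware-assisted nested paging. `TwoLevelMMU` is a hypothetical name.

```python
from typing import Dict

PAGE_SHIFT = 12                      # 4 KB pages
OFFSET_MASK = (1 << PAGE_SHIFT) - 1


class TwoLevelMMU:
    def __init__(self, guest_pt: Dict[int, int], host_map: Dict[int, int]) -> None:
        self.guest_pt = guest_pt       # guest virtual page -> guest "physical" page (VA -> PA)
        self.host_map = host_map       # guest physical page -> host page (PA -> HA)
        self.tlb: Dict[int, int] = {}  # caches the combined VA page -> HA page
        self.walks = 0                 # how many times both tables had to be walked

    def translate(self, va: int) -> int:
        vpn, offset = va >> PAGE_SHIFT, va & OFFSET_MASK
        if vpn not in self.tlb:
            self.walks += 1              # TLB miss: walk both tables
            ppn = self.guest_pt[vpn]     # KeyError stands in for a page fault
            self.tlb[vpn] = self.host_map[ppn]
        return (self.tlb[vpn] << PAGE_SHIFT) | offset


if __name__ == "__main__":
    mmu = TwoLevelMMU(guest_pt={0x1: 0x5}, host_map={0x5: 0x9})
    print(hex(mmu.translate(0x1234)))  # 0x9234 after a full walk
    print(hex(mmu.translate(0x1FFF)))  # 0x9fff served from the TLB
    print(mmu.walks)                   # 1
```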

Thursday 26 May 2011

Basics of Memory Management

Reading all these articles in Wikipedia and various blogs about memory management technologies put me to shame. I literally didn't know anything about it and would probably never have found out if I had stayed a pure networking guy. Since I want to go a bit deeper into vSphere memory management (memory virtualization, virtual MMU, TPS) in my next articles, today I need to build a foundation on top of which I can put additional layers later. I will try to explain new stuff the way I would have liked it to be explained to me a week ago.

I need to start by explaining some definitions and basic principles of memory management.

Almost all modern applications and operating systems work with virtual memory. A process has no idea where its actual memory is located; each process truly believes that it has its own address space.
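To make "each process believes it has its own address space" concrete, here is a minimal Python sketch assuming 4 KB pages and a single-level page table per process (real x86 page tables have several levels). `Process` and the page-table contents are hypothetical: both processes use the same virtual address, yet they land in different physical frames.

```python
from typing import Dict

PAGE_SIZE = 4096


class Process:
    def __init__(self, name: str, page_table: Dict[int, int]) -> None:
        self.name = name
        self.page_table = page_table  # virtual page number -> physical frame number

    def to_physical(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        frame = self.page_table[vpn]  # a missing entry would be a page fault in a real OS
        return frame * PAGE_SIZE + offset


if __name__ == "__main__":
    a = Process("a", {16: 3})
    b = Process("b", {16: 7})
    va = 16 * PAGE_SIZE + 10           # the same virtual address in both processes
    print(a.to_physical(va))           # 12298 (frame 3)
    print(b.to_physical(va))           # 28682 (frame 7)
```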

Tuesday 24 May 2011

NUMA - Non-Uniform Memory Access

Over the last two days I have spent quite a lot of time reading about topics that are completely new to me: NUMA, Transparent Page Sharing, TLB, Large Pages and Page Tables. I hope to write several posts this week on each of these technologies and how they work together. I have done some tests playing around with Large Pages in ESXi and would like to share this information as well.

I want to start with an explanation of what NUMA is and to what extent ESX is NUMA-aware. There is a huge amount of information about NUMA on the Internet and you are probably already familiar with it, so please let me know if I have made any mistakes in this post.

NUMA stands for Non-Uniform Memory Access and has nothing to do with the Romanian music band and its song "Numa, Numa, yeah". Currently it is present in Intel Nehalem and AMD Opteron processors. I always assumed that CPUs share memory equally; however, this is not the case with NUMA.

Tuesday 17 May 2011

Virtual MS Failover Cluster migration between storage arrays

Recently we had the task of migrating all data from an old HP MSA 2312 FC to an HP EVA 6400. The MSA was used mostly for vSphere and hosted several VMFS datastores. Basically, the whole migration procedure was quite simple, though it had to be done very carefully.

However, we faced a challenge. I have experience migrating VMs between storage arrays and vSphere environments at the same time (even between different versions, 3.5 and 4.1), so I could easily draft a migration plan. This time, however, there were 2 production virtual-virtual MS Failover Clusters running in that vSphere. One failover cluster was running MS Windows 2003 and Exchange Server. The other was a Windows 2008 Failover Cluster running a clustered SQL Server. Both clusters were using RDM disks in physical compatibility mode. Since the services running on these clusters were quite important, we couldn't afford a long downtime.

Thursday 12 May 2011

Introduction

Hello everyone.

My name is Askar Kopbayev. I work as Network & Infrastructure Coordinator at Agip KCO, and this is my first blog ever. I am planning to write mostly about VMware, for the simple reason that it is my favourite technology nowadays, and about my preparation for the VCAP-DCA exam; nevertheless, I will probably also post about networking, storage and other topics I work with.

I have been thinking about blogging for quite a long time; however, I couldn't find an appropriate theme that would be interesting to write about. Last week I had a trip to Madrid for the HP Technology&Work on Tour event (I will definitely write about it in my next posts), and during one of the presentations I understood a couple of things:

1. I want to be a Datacenter Administrator, which means I want to be a storage, networking and server administrator. I want to be involved in all activities around data centers.

2. Blogging might help me trace my own progress towards VCAP and also help me structure the new things I learn. You all know how clear things become when you explain them to another person.

My experience includes 5 years of networking with Cisco products (CCNP, CCSP), some Microsoft skills and qualifications, which are quite obsolete (MCSE 2000), and half a year of vSphere experience along with the VCP certification.

Btw, my mother tongue is Russian, so you can always write to me or comment in Russian as well.

Ciao,

Askar
